So Sick: Threat Hunting and Data Engineering with Pattern Matching

The security industry has a remarkable talent for taking simple problems and wrapping them in increasingly complex solutions. While everyone’s busy adding “AI-powered” to their product descriptions, three algorithms from the 1970s have been quietly working in the background showing the ‘70s may have been rough for fashion, but it was a golden era for the future of Data Engineering and Threat Hunting.

Bloom filters (1970) showed us that “probably” can be better than “definitely.” Knuth-Morris-Pratt (1977) proved that sometimes the fastest way to find something is to know where not to look. And Aho-Corasick (1975) demonstrated that looking for multiple patterns doesn’t require reading your data over and over again.

These algorithms have been solving real problems since before most of us were born (and if you remember when they were published, congratulations on that retirement portfolio). They’ll probably still be working long after the current AI hype cycle has passed.

The Pattern Matching Problem: Where Chaos Meets Order

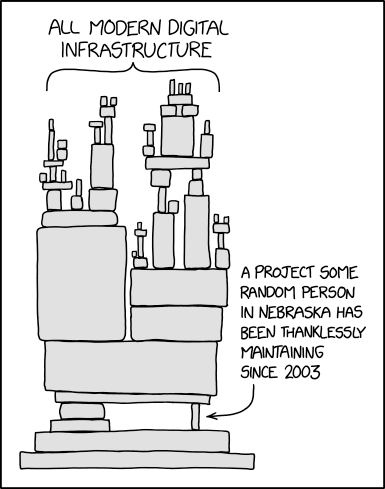

Every security tool thinks it knows the “right” way to format data. EDRs think they send pristine JSON, your firewall spits out whatever format that intern conceived in 2003, and the custom appliance someone built in Nebraska — does it even generate logs?

Pattern matching is about making sense of this chaos. I’ve often half-joked that a good threat hunter could find threats without a SIEM, and this is why. Whether you’re parsing logs, hunting threats, or normalizing data, you’re solving one core problem: how do you efficiently extract meaning from semi-structured chaos?

Abstract Tree, Oh Abstract Tree, How Lovely Are Your Branches

When someone says they’ve “structured” their data, they’re usually just hiding unstructured chaos behind a JSON facade {because adding curly braces makes everything better}. That’s where Abstract Syntax Trees come in — they’re the difference between actually understanding your data and just pretending to.

In data engineering and threat detection, we often deal with complex and nested, data formats. Abstract Syntax Trees (ASTs)provide a way to bring order to this chaos by organizing data into a tree structure that captures both content and relationships. ASTs are essential for parsing structured or semi-structured data, allowing us to understand how different components of data relate to each other in a hierarchical form, rather than a flat sequence.

How ASTs Work

An AST represents the syntax of a piece of data as a tree, where each node corresponds to a structural component — such as a field, operator, or value — and each branch shows the relationship between these components. For example, in a log entry, you might have a root node representing the log itself, with child nodes for attributes like “timestamp,” “event type,” and “details.” Each of these attributes might also have their own child nodes, creating a nested structure that mirrors the data’s organization.

In data streams with nested or complex formats, ASTs can process and categorize fragments of information as they arrive, preserving relationships without needing the entire data structure loaded in memory. For high-volume streaming detection they are especially useful for. structural relationships between data elements, like source IPs and outcomes, are essential for identifying threat patterns

Heres a JSON log format:

{

"timestamp": "2024-01-20T03:14:15Z",

"event": {

"type": "authentication",

"outcome": "failure",

"details": {

"user": "admin",

"source_ip": "192.168.1.1"

}

}

}Which ends up looking something more like this in an AST:

Sure, it looks pretty in diagrams, and my bar napkin sketch should be in the Louvre, but there’s is a problem to this solution — building and traversing these trees is computationally expensive. When you’re processing millions of events per second, every microsecond spent navigating branches matters, so where they are applied matters.

However, ASTs are invaluable in situations where understanding the relationships between data elements is as important as the elements themselves. By parsing data into an AST, you’re not just processing isolated fields; you’re building a structural model that can be navigated, queried, and transformed. This is particularly useful in threat detection and hunting, where relationships between fields (like a user’s IP associated with a failed login attempt) might indicate potential threats.

So enters our three algorithms — each solving a different piece of the pattern matching puzzle without requiring a PhD in computer science or a venture capital round to implement.

Middle-aged Algorithms For Middle-aged Security Analysts

At hundreds of millions or trillions of events per day, you need different tools for different jobs. Like any good toolkit, each algorithm solves a specific problem. This is one thing our Detection Engineering team has spent a lot of time thinking through — it’s not streaming vs batch, it’s about what do you need to find, then where. And unlike the latest AI solution that promises to solve everything (while actually solving nothing), these algorithms do exactly what they claim — no marketing budget required.

Bloom Filters (1970): The Art of Intelligent Uncertainty

Bloom filters are great when “meh” is good enough and answer one question really well: “Have I definitely never seen this before?” Think of it as a bouncer who’s absolutely certain about who’s not on the list but might occasionally let someone slip through. In security, this kind of intelligent uncertainty turns out to be surprisingly useful.

Bloom filters work by using a bit array and a few hash functions to store “maybes” efficiently. Each potential match gets hashed to several positions in the bit array, which are marked as “1.” When a new item comes along, the Bloom filter hashes it and checks those positions — if all the bits are marked, it says, “Sure, close enough.” But if even one bit is a zero, it’s an instant pass.

The trade-off? Bloom filters are lightning-fast and memory-efficient, but they aren’t perfect. They’ll never miss a real threat, but they might occasionally raise a false alarm. For large-scale threat detection where you need to quickly eliminate obvious non-threats, that’s a perfectly acceptable compromise.

class BloomFilter:

def __init__(self, size, hash_count):

self.size = size

self.hash_count = hash_count

self.bit_array = [0] * size

def add(self, item):

for i in range(self.hash_count):

result = hash(str(item) + str(i)) % self.size

self.bit_array[result] = 1

def check(self, item):

for i in range(self.hash_count):

result = hash(str(item) + str(i)) % self.size

if self.bit_array[result] == 0:

return False # Definitely not in set

return True # Possibly in setOne Unique Feature of Bloom Filters: Speed with Certainty of “No”

One standout quality of Bloom filters is their ability to provide a definitive “no” at lightning speed. If an item isn’t in the set, the Bloom filter guarantees it with absolute certainty and minimal resource usage. This makes them incredibly efficient for scenarios where you need to quickly eliminate non-matches from massive datasets. You gain unmatched speed and memory efficiency, as long as you’re okay with occasionally double-checking a “maybe.”

Aho-Corasick (1975): Parallel Processing Before It Was Cool

In 1975, Mozambique, Cape Verde and Sao Tome and Principe achieved independence from Portugal, the Vietnam War ended, and Alfred Aho and Margaret Corasick were inventing a way to search for multiple patterns simultaneously. (PoliSci makors now working in tech — rise up!) Their algorithm builds a fancy state machine (like a really efficient game of connect-the-dots) that lets you find all matching patterns in a single pass through your data. This magical ability comes down to failure links, which ensure that when a mismatch happens, you don’t have to start your search all over from the top. Instead, failure links let you skip to the next most probable position in the trie, saving time (and CPU cycles).

Think of failure links as a smart way of saying, “Yeah, we didn’t find ‘badpattern1’ here, but there’s still hope for ‘badpattern2.’” Rather than throwing your hands up at every mismatch, Aho-Corasick says, “I’ll pick up where I left off.”

How Failure Links Work

Imagine you’re searching for the patterns abc, abx, and bc in the text. As you build the trie, failure links let each node know where to “fall back” if a match isn’t found at a given point in the search. Here’s a quick rundown of how it works:

- Constructing the Trie: Each character in your patterns becomes a node in the trie. For abc, you’d create a path a -> b -> c, and similarly for abx and bc.

- Building Failure Links: Now, here’s where the failure links come in. If you’re on a -> b and realize c doesn’t match, Aho-Corasick follows a failure link back up the trie to the longest suffix that matches a prefix of another pattern.

So if ab didn’t lead to abc, the algorithm can fall back to bc instead, which might match.

Let’s put this into code to make it clearer:

from collections

import deque

class AhoCorasick:

def __init__(self):

self.trie = {} # Our main trie structure

self.fail_links = {} # Failure links for efficient backtracking

def add_pattern(self, pattern):

node = self.trie

for char in pattern:

node = node.setdefault(char, {})

node['output'] = pattern

def build_failure_links(self):

queue = deque()

# Step 1: Initialize failure links for the root level

for char, node in self.trie.items():

node['fail'] = self.trie

queue.append(node)

# Step 2: Build failure links for other levels

while queue:

current_node = queue.popleft()

for char, next_node in current_node.items():

if char in ['fail', 'output']:

continue # Skip failure and output entriea

# Set up failure link for next_node

fail_state = current_node.get('fail', self.trie)

while fail_state and char not in fail_state:

fail_state = fail_state.get('fail', self.trie)

# Update failure link if possible

next_node['fail'] = fail_state[char] if fail_state else self.trie

queue.append(next_node)

def search(self, text):

matches = []

node = self.trie

for i, char in enumerate(text):

# Follow failure links if no match in the current node

while node and char not in node:

node = node.get('fail', self.trie)

node = node.get(char, self.trie

# If there’s an output, it’s a match

if 'output' in node:

matches.append((i, node['output']))

return matchesWhy Failure Links Matter

Without failure links, every mismatch would send Aho-Corasick back to square one. Imagine searching for patterns like google, goggle, and googel without failure links — each mismatch would make the algorithm trudge back to the start. With failure links, it simply hops to the longest viable suffix, sparing your CPU the existential crisis of “Did I mess up again?” Save that sort of thinking for your inner monologue at 2 AM.

KMP (1977): Sometimes Knowing Where Not to Look is Half the Battle

Knuth, Morris, and Pratt figured out that when you’re looking for something specific, knowing where it can’t be is just as valuable as knowing where it might be. Their algorithm remembers what it’s already seen, so it doesn’t waste time checking the same things twice.

Knuth, Morris, and Pratt had a thought: When you’re looking for something specific, knowing where it can’t be is just as useful as knowing where it might be. It doesn’t work for finding your wallet but KMP optimizes pattern matching by skipping over parts of the text that it already knows don’t match, thanks to a handy little structure called the partial match table (or failure table).

Instead of re-checking characters you know are wrong, it lets you jump ahead and keep searching, skipping the parts you already know won’t lead to a match. KMP is a one-track mind — it’s after one pattern, and one pattern only — but it does it with laser focus and a short memory for distractions.

How the Partial Match Table Works

The partial match table tells KMP where to resume its search after a mismatch, based on what it’s already seen. It works like this:

- Building the Table: The table records the longest prefix of the pattern that also happens to be a suffix. This is like saying, “Hey, I’ve seen this part of the pattern before, so let’s skip the guesswork next time.”

- Using the Table to Skip Ahead: When a mismatch occurs, KMP uses the table to figure out the next best place to pick up the search, without backtracking. This way, it doesn’t waste time checking characters that it already knows aren’t a match.

Here’s how this works in code:

def build_kmp_table(pattern):

# Create the partial match table (failure table)

table = [0] * len(pattern)

j = 0 # Length of previous longest prefix suffix

# Iterate over the pattern to fill in the table

for i in range(1, len(pattern)):

while j > 0 and pattern[i] != pattern[j]:

j = table[j - 1] # Fall back to a shorter prefix

if pattern[i] == pattern[j]:

j += 1

table[i] = j # Record the length of the longest prefix suffix

return table

def kmp_search(text, pattern):

# Get the partial match table for the pattern

table = build_kmp_table(pattern)

j = 0 # Index for pattern

matches = []

# Go through the text to search for the pattern

for i in range(len(text)):

# Fall back as necessary if characters don’t match

while j > 0 and text[i] != pattern[j]:

j = table[j - 1] # Skip ahead based on the table

if text[i] == pattern[j]:

j += 1

if j == len(pattern): # Full match found

matches.append(i - j + 1)

j = table[j - 1] # Prepare for the next potential match

return matchesHow the Partial Match Table Saves Time

Suppose you’re searching for the pattern ABABC in the text ABABABABC. KMP (and its partial match table) makes this faster by:

- Starting Strong: You get to ABAB in both the text and the pattern. KMP’s hopeful: we’re halfway there!

- Mismatch Moment: You hit a C in the pattern but a B in the text. Now, without the partial match table, you’d have to go back to the start, but KMP’s smarter than that. The partial match table says, “Chill, bruh. You don’t need to check from the beginning. Just pick up where the last prefix match left off.”

- Jumping Ahead: The table tells KMP to skip straight to the last AB it saw and resume from there, rather than wasting time on characters we know won’t lead to a match.

- Finding the Match: Thanks to the partial match table, KMP quickly finds ABABC without revisiting the same characters over and over. This efficiency is what makes KMP so powerful for single-pattern searches, especially when the pattern or text is long.

Why KMP’s Partial Match Table Matters

Without the partial match table, KMP would be just another brute-force algorithm, revisiting characters and second-guessing itself. But with the table, it becomes a focused, efficient searcher, only revisiting characters when absolutely necessary. Imagine trying to find a particular phrase in a novel, but every time you got to the wrong chapter, you had to start from page one. KMP’s partial match table is like a bookmark that remembers where you left off, saving you time and mental energy (or in this case, CPU cycles).

When (and When Not) to Use KMP

KMP is your go-to for single-pattern searches where backtracking would otherwise kill performance. It’s fast and precise, but remember — it’s not built for multiple patterns (that’s Aho-Corasick’s territory).

Putting It All Together

Each algorithm shines in its own way:

- Bloom filters eliminate the impossible quickly

- Aho-Corasick handles multiple patterns efficiently

- KMP finds exact matches without wasting cycles

The trick isn’t picking the “best” one — it’s knowing when to use each. Sometimes you need Bloom filters’ speed, sometimes you need Aho-Corasick’s thoroughness, and sometimes you need KMP’s precision.

Conclusion

History has a way of repeating itself, and I still find myself using these algorithms every week. They do one thing, do it well, and don’t need a neural network to get the job done.

In the world of data engineering and threat hunting, sometimes the most reliable tools are the ones that have been quietly doing their job for decades. The best solutions don’t always need to be the newest — they just need to work.